¶ Minime-T5/TXT2VEC

Minime-T5 is a small LLM currently based on Google's Flan-T5 model.

It is fast and light on memory. It can run on a CPU with about 400 MB of RAM, or on a GPU with about 400 MB of VRAM when using CUDA.

You can install it within the CHIM.exe launcher under Install Components.

We use Minime-T5 for the following tasks:

- Action Triggering: Every user input is sent to the model, which determines if an action is needed. If so, it will add a recommendation to the prompt sent to the AI/LLM. This can be especially useful with less sophisticated LLMs/AIs.

- Memory Offering: Not every user input needs to trigger a memory, so we use this model to decide whether a memory should be triggered. You must enable “AUTO_CREATE_SUMMARYS” in the default profile for this to work.

- Raw Summarization of Goals: For instance, if you state, "Our new goal is to retrieve all words of power," Minime-T5 should add this to the current mission table so that AI NPCs are aware.

- Oghma Infinium: Lore topic injection into AI NPCs. See the chapter below.

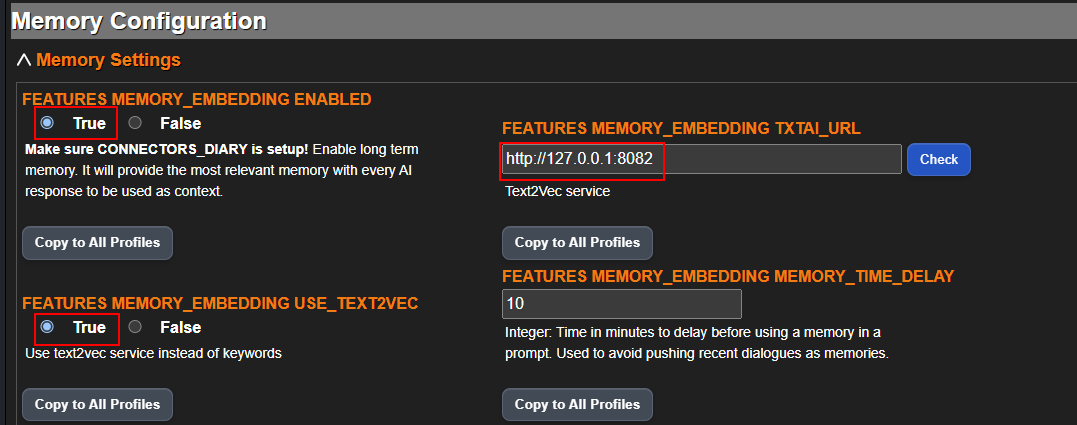

- Text2VEC: Embedding service used to generate accurate RAG vectors for pulling Oghma and memory topics.
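
To picture how an embedding service can pull the right Oghma or memory topic, here is a minimal sketch of vector retrieval by cosine similarity. It is not the actual Text2VEC implementation or API: the function names (`cosine_similarity`, `top_topics`), the topic names, and the three-dimensional vectors are all hypothetical and made up by hand for illustration. A real embedding model would produce much longer vectors.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def top_topics(query_vec, topic_vecs, k=1):
    """Return the names of the k topics whose vectors are closest to the query."""
    scored = [
        (cosine_similarity(query_vec, vec), name)
        for name, vec in topic_vecs.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Hypothetical, hand-made vectors standing in for real embeddings.
TOPICS = {
    "words_of_power": [0.9, 0.1, 0.0],
    "daedric_princes": [0.1, 0.9, 0.1],
    "civil_war": [0.0, 0.2, 0.9],
}

# Pretend this vector is the embedding of the player's latest input.
query = [0.8, 0.2, 0.1]

print(top_topics(query, TOPICS, k=1))
```

The topic with the highest similarity to the player's input would be the one whose lore or memory text gets injected into the prompt.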