¶ KoboldCPP

A rather common question we get is “Can you run the mod offline without needing to pay to use an API?” The answer is yes, but...

Running an offline LLM is possible thanks to a neat program called KoboldCPP. Running the mod on your own hardware can be appealing, especially if you are concerned about data privacy. However, it can cause you a lot of headaches if you do not know what you are doing.

It has a lot of drawbacks compared to using an online model:

- You are limited to a 7B-13B sized model unless you have an NVIDIA data center in your garage.

- You need a GOOD (we are talking RTX 3090 or better) NVIDIA graphics card to run an LLM, TTS and heavily modded Skyrim on the same machine. Otherwise you will need to use two computers. There is also the electricity bill to consider.

- These smaller LLMs are dumber at roleplay and may provide a less fun experience compared to a 70B or 405B model hosted online. There is a higher chance that the LLM will break JSON formatting and generate garbage responses.

- You will have to manually debug any errors and understand how HuggingFace LLMs work.

UNLESS YOU REALLY KNOW WHAT YOU ARE DOING, WE DO NOT RECOMMEND USING KOBOLDCPP WITH CHIM.

Please just use OpenRouter if it's your first time setting up the mod. It is much, much easier to get working.

We have kept it in as a feature because we have a lot of advanced users who do know what they are doing and enjoy tinkering with it. But if it's your first time setting up this mod, just use OpenRouter. You will save yourself so much trouble trying to configure an offline LLM compared to the plug-and-play capability of an online service. For $5 you will get a lot of playtime out of it.

However, if you are still determined to use KoboldCPP, below is a short guide created by hey_danielx on what you need to do to set up a working configuration.

Users have also noticed that SSE DisplayTweaks helps KoboldCPP run faster while playing Skyrim.

¶ Setup

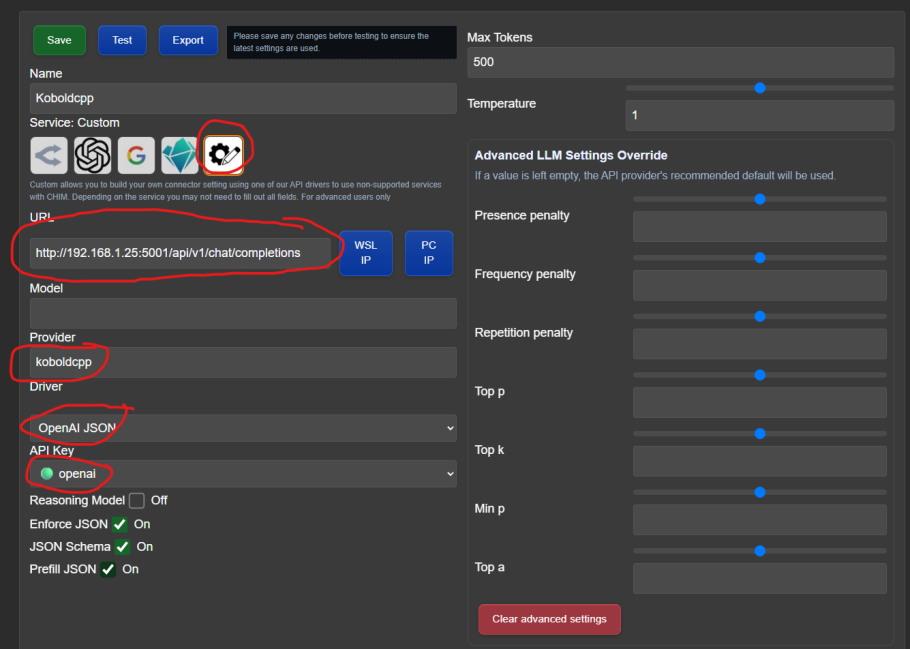

Under LLM Connectors create a new connector. Use the below settings.

Name: Koboldcpp (can be anything)

Custom Service

URL: Click PC IP, then make sure the address uses port 5001 and ends with :5001/api/v1/chat/completions

e.g. http://192.168.1.25:5001/api/v1/chat/completions

Provider: koboldcpp

Driver: OpenAI JSON

Click Save

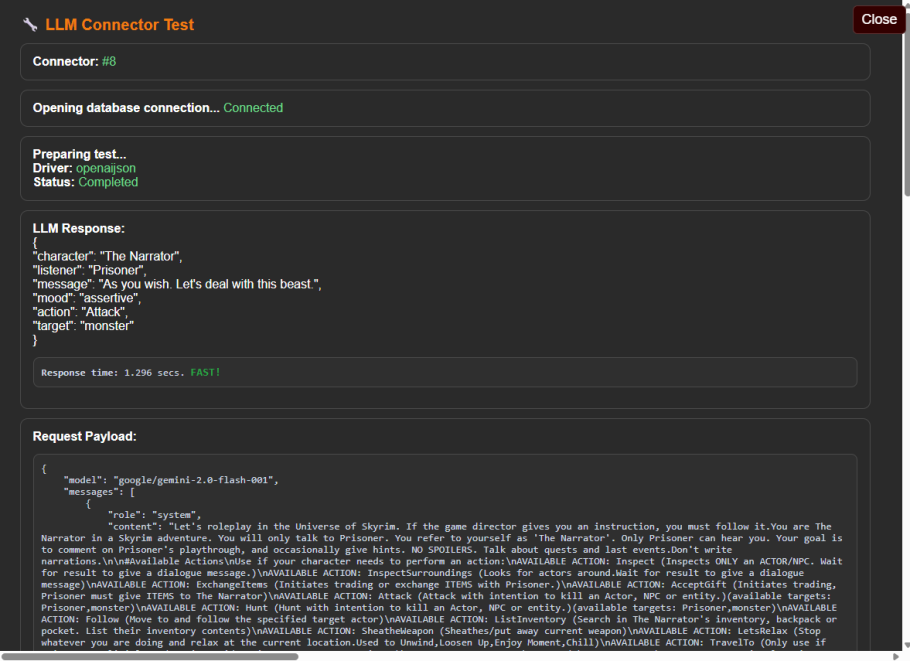

You can verify it works by clicking Test.
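If the Test button fails and you want to check the endpoint outside of the mod, a small script can send a request straight to KoboldCPP. The sketch below is only an illustration, not how CHIM itself talks to the connector. It assumes the endpoint accepts an OpenAI-style chat completion body (based on the "OpenAI JSON" driver setting above) and that KoboldCPP answers with whichever model it has loaded, so no model name is sent. The IP address is the example one from above, and the function names are made up for this example.

```python
import json
import urllib.request

# Assumption: swap in your own PC IP. Port and path follow the connector settings above.
KOBOLD_URL = "http://192.168.1.25:5001/api/v1/chat/completions"


def build_payload(prompt, max_tokens=50):
    """Build a minimal OpenAI-style chat completion request body (assumed format)."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]


def send_test(url=KOBOLD_URL, prompt="Say hello in one short sentence."):
    """POST a single test prompt to the endpoint and return the reply text."""
    data = json.dumps(build_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # A local model can be slow to answer, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(json.loads(response.read().decode("utf-8")))
```

With KoboldCPP running and a model loaded, calling `print(send_test())` should print a short reply. A connection error usually points to a wrong IP, a wrong port, or KoboldCPP not running.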